When Amazon Web Services’s Route 53 went dark early Monday, the ripple effect was felt from gaming consoles in Tokyo to banking apps in São Paulo. The AWS DNS outage began at roughly 03:00 a.m. Eastern Time (07:00 UTC) in the Northern Virginia data‑center hub that powers the US‑EAST‑1 region. Within minutes, DNS queries that normally translate human‑readable URLs into IP addresses started failing, effectively putting a massive slice of the internet behind a locked door.

What Happened and Why It Mattered

The outage manifested as a cascade of resolution failures, first flagged by internal alarms on the DynamoDB API that underpins many of Route 53’s internal tables. As the service stalled, dependent workloads – from e‑commerce checkout pages to video‑conference bridges – saw “server not found” errors, even though the underlying servers remained perfectly healthy. In plain terms, the houses were standing, but the street signs vanished.
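The "street signs" analogy corresponds to two separate steps a client takes: it resolves a name to addresses, then opens a connection to one of them. The following minimal Python probe is a sketch under our own assumptions. The function name `diagnose` and its return labels are hypothetical, not an AWS tool. It shows how an application could tell a DNS failure apart from an unreachable server using the standard library's `socket.getaddrinfo` and `socket.create_connection`.

```python
import socket
from typing import Callable


def diagnose(host: str, port: int,
             resolve: Callable = socket.getaddrinfo,
             connect: Callable = socket.create_connection,
             timeout: float = 3.0) -> str:
    """Classify a host:port probe as 'dns', 'connect', or 'ok'."""
    try:
        # Step 1: turn the name into addresses. A DNS outage fails here,
        # even if the servers behind the name are healthy.
        infos = resolve(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror:
        return "dns"
    # Step 2: the "street sign" worked; now try each address over TCP.
    for _family, _type, _proto, _canonname, sockaddr in infos:
        try:
            conn = connect(sockaddr[:2], timeout=timeout)
        except OSError:
            continue  # this address refused or timed out; try the next one
        conn.close()
        return "ok"
    return "connect"  # the name resolved, but no address accepted a connection
```

The `resolve` and `connect` parameters exist only so the two steps can be swapped out in tests. During an outage like this one, hostnames whose DNS lookups were failing would be expected to come back as `"dns"` rather than `"connect"`.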

By 07:50 UTC, Downdetector was logging an avalanche of reports, with spikes for high‑profile platforms such as Coinbase, the battle‑royale hit Fortnite, and the United Airlines mobile app. Within the first two hours the total reached roughly 50,000. The outage lingered for several hours, largely because DNS caches kept serving the bad answers they had stored until their Time‑to‑Live (TTL) values expired, even after the underlying fault was fixed.
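To see why caching can stretch an outage, consider a simplified resolver cache. This sketch does not reproduce how any particular resolver works. Real resolvers cache negative answers for a period set by the zone's SOA record (RFC 2308), whereas here a failed lookup is simply stored as `None` with its own TTL. The class name `TTLCache`, the hostname `api.example.com`, and the 300‑second TTL are illustrative assumptions.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple


@dataclass
class CachedAnswer:
    address: Optional[str]  # None models a cached failure (no usable answer)
    expires_at: float       # clock time after which the entry is discarded


class TTLCache:
    """Simplified resolver cache: an answer is reused until its TTL runs out."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: Dict[str, CachedAnswer] = {}

    def put(self, name: str, address: Optional[str], ttl: float) -> None:
        self._entries[name] = CachedAnswer(address, self._clock() + ttl)

    def get(self, name: str) -> Tuple[bool, Optional[str]]:
        """Return (hit, address); a hit may carry a cached failure (None)."""
        entry = self._entries.get(name)
        if entry is None or self._clock() >= entry.expires_at:
            self._entries.pop(name, None)  # expired: caller must query upstream
            return False, None
        return True, entry.address


if __name__ == "__main__":
    now = [0.0]                                  # fake clock for a repeatable demo
    cache = TTLCache(clock=lambda: now[0])
    cache.put("api.example.com", None, ttl=300)  # failure cached mid-outage
    now[0] = 120.0                               # upstream DNS fixed at t = 120 s...
    print(cache.get("api.example.com"))          # (True, None): still serving the failure
    now[0] = 300.0                               # ...but clients recover only at t = 300 s
    print(cache.get("api.example.com"))          # (False, None): expired, re-query upstream
```

The gap between the upstream fix (t = 120 s) and the cache expiry (t = 300 s) is the "lingering" window. Multiplied across millions of caches with different expiry times, it turns a single repair into a gradual recovery.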

Technical Roots of the Outage

AWS engineers traced the failure to a misbehaving internal routing component that interrupted communication between Route 53’s DNS resolvers and the DynamoDB back‑end. The precise trigger, whether a recent configuration change, an autoscaling edge case, or a network address translation glitch, was not fully disclosed, leaving many in the cloud‑ops community to speculate.

What’s clear is that US‑EAST‑1, the most heavily trafficked AWS region, acted as a single point of failure for a suite of services that rely on Route 53, even though Route 53’s name servers are globally distributed. When that DNS lookup chain broke, every dependent endpoint felt the shock, illustrating how tightly modern applications are bound to a handful of hyper‑scale providers.

Who Was Affected

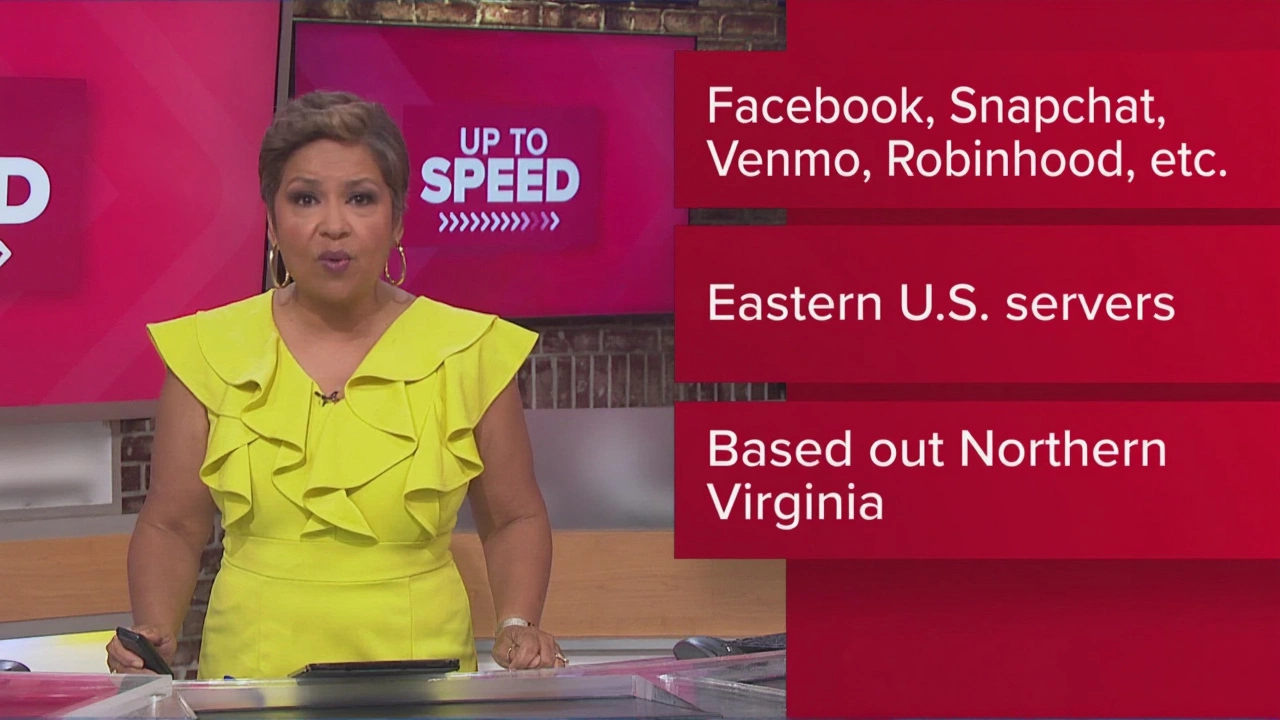

Beyond the headline‑grabbing names, the outage rippled through everyday tools. Millions of Zoom meetings stalled, Snap’s camera filters froze, Signal’s encrypted chats threw connection errors, and Venmo users saw delayed payments. Even Ring video doorbells reported “service unavailable” messages.

Financial services felt the pinch hardest. Several regional banks reported transaction timeouts, while crypto exchange Coinbase experienced a surge of failed API calls that rattled traders. Gaming platforms – Roblox, the Epic Games Store, and Pokémon GO – all posted status updates apologizing for missed battles and lost progress.

Industry Reaction and Lessons Learned

Amazon acknowledged the incident in a brief post on its official blog at 09:15 a.m. Eastern and later declared it “fully mitigated” by mid‑day. The statements, while reassuring, left many IT leaders wanting more detail. In the hours that followed, analysts at WindowsForum.com warned that the episode underscores a “concentration risk” inherent in a cloud market where a few vendors power the majority of online traffic.

“Treat cloud outages as inevitable edge cases,” said a senior engineer who requested anonymity. “Build offline fallbacks, maintain independent health monitors, and rehearse failover playbooks.” The advice echoes best‑practice guides from the Cloud Security Alliance, which recommend multi‑region deployments and DNS‑level redundancy using secondary providers like Cloudflare or NS1.
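The advice on independent health monitors and failover playbooks can be made concrete with a small state machine. This is a minimal sketch under stated assumptions. Endpoint labels such as `us-east-1` and `us-west-2` are placeholders, and the threshold of three consecutive failed checks is an arbitrary choice. The health results are assumed to come from a monitor running outside the primary provider. The `offline-fallback` value stands in for whatever degraded mode an application offers.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Endpoint:
    name: str
    failures: int = 0  # consecutive failed health checks seen so far


@dataclass
class FailoverPool:
    endpoints: List[Endpoint]  # ordered by preference, primary first
    threshold: int = 3         # assumed: failures before an endpoint is skipped

    def record(self, name: str, healthy: bool) -> None:
        """Feed in one result from an independent (off-provider) health monitor."""
        for ep in self.endpoints:
            if ep.name == name:
                ep.failures = 0 if healthy else ep.failures + 1

    def active(self) -> str:
        """Return the most preferred endpoint still considered healthy."""
        for ep in self.endpoints:
            if ep.failures < self.threshold:
                return ep.name
        return "offline-fallback"  # every endpoint is down: use degraded mode
```

Requiring several consecutive failures before switching avoids flapping on a single dropped probe. This sketch fails back to the primary after one healthy check. Production systems usually demand several before trusting a recovered endpoint again, and rehearsing that path is exactly what a failover drill exercises.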

Regulators in the EU and the U.S. are also taking note. A spokesperson from the U.S. Federal Communications Commission hinted that a formal review of large‑scale DNS failures may be on the agenda, especially as more critical infrastructure – from emergency services to voting platforms – leans on cloud‑hosted name resolution.

Looking Ahead: Mitigating Future Cloud Disruptions

For businesses caught up in the outage, the takeaway is clear: diversify your DNS strategy. Hosting secondary name servers with a provider outside the primary provider’s network can shave minutes, or even hours, off recovery times. Configuring shorter TTLs for critical records also means stale cached answers expire sooner when a glitch strikes, at the cost of more frequent lookups.
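In production, DNS redundancy is usually achieved by listing name servers from both providers in the domain's delegation, so that recursive resolvers retry the second provider automatically. The same idea can be modeled at the application level as an ordered chain of lookups. In this sketch, the provider labels, the `resolve_with_fallback` helper, and the stub lookups are hypothetical. A real client would plug an actual DNS library in their place.

```python
from typing import Callable, List, Sequence, Tuple

# A lookup maps a hostname to an IP address and raises LookupError on failure.
Lookup = Callable[[str], str]


def resolve_with_fallback(name: str,
                          providers: Sequence[Tuple[str, Lookup]]) -> Tuple[str, str]:
    """Ask each DNS provider in order; return (provider, address) from the first answer."""
    errors: List[str] = []
    for provider, lookup in providers:
        try:
            return provider, lookup(name)
        except LookupError as exc:  # KeyError is a LookupError subclass too
            errors.append(f"{provider}: {exc!r}")
    raise LookupError("all providers failed: " + "; ".join(errors))


if __name__ == "__main__":
    def primary(name: str) -> str:
        raise LookupError("SERVFAIL")  # stand-in for the failing primary provider

    def secondary(name: str) -> str:
        return {"shop.example.com": "192.0.2.10"}[name]  # documentation-range IP

    print(resolve_with_fallback("shop.example.com",
                                [("primary", primary), ("secondary", secondary)]))
    # ('secondary', '192.0.2.10')
```

Shorter TTLs complement this setup. A well‑behaved cache holds an answer no longer than its TTL, so a 300‑second TTL bounds how long a bad answer can linger at about five minutes, versus a full day for an 86,400‑second TTL. The price is roughly proportionally more queries to the authoritative servers.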

On the provider side, AWS has pledged to publish a post‑mortem detailing the exact chain of events. Industry insiders hope the report will include recommendations for more granular health checks on internal routing tables and stricter rollout controls for configuration changes.
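AWS has not described its internal deployment tooling, so the following is only a generic illustration of what "stricter rollout controls" often means in practice. The change is applied in small waves, health is checked after each wave, and every host is rolled back at the first failure. The `staged_rollout` function and the host names `r1` to `r4` are hypothetical.

```python
from typing import Callable, List, Sequence


def staged_rollout(waves: Sequence[Sequence[str]],
                   apply: Callable[[str], None],
                   rollback: Callable[[str], None],
                   healthy: Callable[[str], bool]) -> bool:
    """Apply a change wave by wave; halt and roll back if any host fails its check."""
    done: List[str] = []
    for wave in waves:
        for host in wave:
            apply(host)
            done.append(host)
        # Health gate: every host in this wave must pass before the next wave starts.
        if not all(healthy(h) for h in wave):
            for host in reversed(done):  # undo in reverse order of application
                rollback(host)
            return False
    return True
```

Keeping the first wave small limits how many hosts a bad change can reach before the health gate trips. In the test scenario, a failure in the second wave means the fourth host is never touched.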

Until then, the broader tech community is likely to revisit the old adage: “Don’t put all your eggs in one basket.” The 2025 AWS DNS outage may become a case study in universities, reminding the next generation of engineers that even the most robust clouds can stumble, and preparedness is the only safety net.

Key Facts

- Outage start: 03:00 a.m. ET (07:00 UTC), October 20, 2025

- Primary failure: Route 53 DNS service in US‑EAST‑1 (Northern Virginia)

- Downdetector reports: ~50,000 within the first two hours

- Most affected services: Coinbase, Fortnite, Zoom, United Airlines, Ring, Snapchat, Venmo

- AWS declared full mitigation by approximately 12:30 p.m. ET

Frequently Asked Questions

How did the AWS DNS outage affect everyday users?

Users saw “website not found” errors across a wide range of apps, from checking balances on Venmo to joining Zoom calls. Even though the underlying services were up, the broken DNS meant browsers and apps couldn’t locate them, leading to missed payments, stalled meetings, and interrupted gaming sessions.

What caused the DNS failure in the US‑EAST‑1 region?

Initial analysis points to a malfunction in the internal routing path between Route 53’s DNS resolvers and its DynamoDB back‑end. AWS has not disclosed whether a recent configuration change, an autoscaling anomaly, or a network address translation issue sparked the problem, but the fault lay in the DNS resolution layer.

Which high‑profile services were disrupted?

The outage hit cryptocurrency exchange Coinbase, the battle‑royale game Fortnite, video‑conferencing platform Zoom, Ring security cameras, Snapchat, Venmo, Roblox, and United Airlines’ mobile app, among many others.

What steps can businesses take to reduce impact from similar outages?

Experts recommend adding a secondary DNS provider, shortening TTL values for critical records, and building offline fallback mechanisms. Regular failover drills and multi‑region deployments can also limit the blast radius when a single cloud region goes dark.

Will regulators impose new rules on cloud providers after this incident?

The U.S. Federal Communications Commission hinted at a review of large‑scale DNS failures, especially as more critical public services depend on cloud infrastructure. While no concrete legislation has been announced, increased scrutiny is likely.